A technical SEO checklist designed to help agencies build repeatable, effective SEO workflows.

JetOctopus sponsored this publication. The opinions expressed in this article are the sponsor’s own.

When you take on large projects or manage websites with hundreds to thousands of pages, you need advanced technical SEO strategies.

Large websites bring their own challenges: vast site architectures, dynamic content, and stiff competition to hold on to rankings.

Strengthening your team’s technical SEO capabilities helps you build a stronger value proposition, one that gives your clients an early advantage and keeps them engaged with your agency.

With that in mind, here is a concise checklist of the most critical aspects of advanced technical SEO, which can help your clients achieve stronger performance in the search engine results pages (SERPs).

Advanced Indexing And Crawl Control

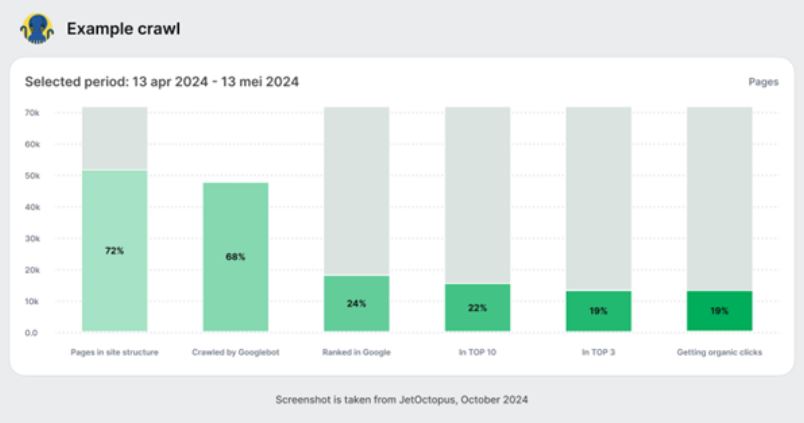

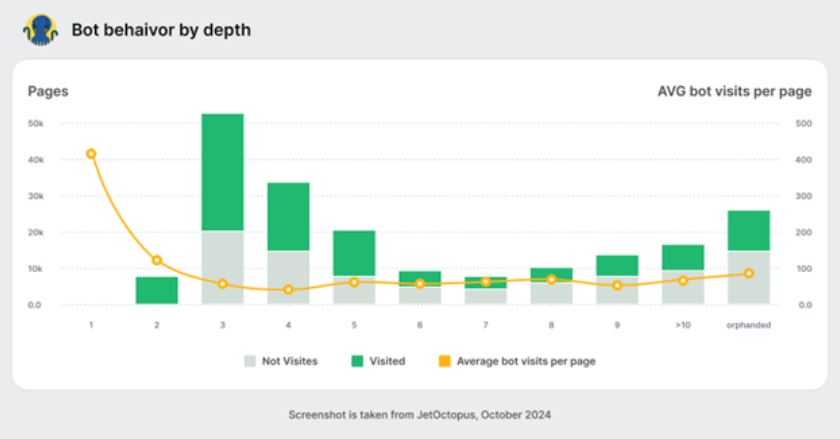

Optimizing how search engines crawl and index a site is the foundation of effective technical SEO. Managing crawl budget effectively starts with log file analysis, which gives you direct insight into how search engines interact with your clients’ websites.

Log file analysis helps with:

- Crawl Budget Management: Making sure Googlebot crawls and indexes your most valuable pages. Log files show how many pages are crawled each day and whether critical sections are being overlooked.

- Non-Crawled Page Detection: Identifying pages that Googlebot ignores because of issues such as slow loading times, poor internal linking, or low-value content, which shows you exactly what needs improvement.

- Understanding Googlebot Behavior: Seeing what Googlebot actually crawls each day. Spikes in crawl activity can point to technical issues on your website, such as auto-generated thin or low-value pages.
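To make the list above concrete, here is a minimal Python sketch of a log analysis pass. It assumes access logs in the Combined Log Format and a hypothetical URL layout in which the first path segment is the site section (for example `/city/`); real log formats vary, so adjust the regular expression to your server. It counts requests whose user agent claims to be Googlebot, per day and per section. User agents can be spoofed, so for production analysis verify Googlebot hits with a reverse DNS lookup, as Google recommends.

```python
import re
from collections import Counter
from datetime import datetime
from urllib.parse import urlsplit

# Combined Log Format, e.g.:
# 66.249.66.1 - - [10/Mar/2024:06:25:14 +0000] "GET /city/kyiv/ HTTP/1.1" 200 5120 "-" "...Googlebot/2.1..."
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Yield (date, path, status) for requests whose user agent claims to be Googlebot."""
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        timestamp = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        yield timestamp.date(), match["path"], int(match["status"])


def crawl_summary(lines):
    """Count Googlebot requests per day and per top-level site section."""
    per_day, per_section = Counter(), Counter()
    for day, path, _status in googlebot_hits(lines):
        per_day[day] += 1
        # "/city/kyiv/" -> "/city/"; the root "/" stays "/"
        first_segment = urlsplit(path).path.strip("/").split("/")[0]
        per_section["/" + first_segment + "/" if first_segment else "/"] += 1
    return per_day, per_section
```

A sudden jump in one section’s daily count, relative to the others, is the kind of spike worth investigating.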

Combining your SEO log analyzer data with Google Search Console (GSC) crawl data gives you a comprehensive view of how the site functions and how search engines interact with it, improving your ability to direct crawler behavior.
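One simple way to combine the two data sources is a set comparison. The sketch below assumes you have exported a list of URLs you expect search engines to crawl (for example from GSC or your sitemaps; the export format is an assumption) and a list of paths Googlebot requested, such as those produced by a log parser. Known URLs that never appear in the logs are candidates for internal linking or speed fixes; crawled URLs you did not expect, such as parameterized search pages, may be wasting crawl budget.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Reduce a full URL or a log path to path (+ query) so both sources compare equally."""
    parts = urlsplit(url)
    path = parts.path or "/"
    return f"{path}?{parts.query}" if parts.query else path


def compare_crawl_data(known_urls, crawled_paths):
    """Compare URLs you expect to be crawled with the paths Googlebot actually requested."""
    known = {normalize(u) for u in known_urls}
    crawled = {normalize(p) for p in crawled_paths}
    return {
        "never_crawled": sorted(known - crawled),      # candidates for linking or speed fixes
        "unexpected_crawls": sorted(crawled - known),  # possible crawl budget waste
    }
```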

Next, configure robots.txt to keep search engines out of low-value add-ons and admin areas while still allowing them to crawl your primary content. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it. To regulate indexing more precisely, use the X-Robots-Tag HTTP header instead. It is particularly useful for non-HTML files, such as PDFs or images, which cannot carry robots meta tags.
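The robots.txt rules and the X-Robots-Tag header can be sketched together. The paths `/admin/` and `/cart/` below are hypothetical examples. Python’s standard `urllib.robotparser` tests which URLs the rules allow, and the hypothetical `extra_headers` function shows where a server would attach `X-Robots-Tag: noindex` to PDF responses.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep crawlers out of admin and cart areas, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, agent="Googlebot"):
    """Check a URL against the robots.txt rules above."""
    return parser.can_fetch(agent, url)


def extra_headers(path):
    """Return response headers that keep non-HTML files such as PDFs out of the index."""
    headers = {}
    if path.lower().endswith(".pdf"):
        headers["X-Robots-Tag"] = "noindex"
    return headers
```

Python’s parser uses simple prefix matching, so it may not reproduce Googlebot’s handling of wildcards or conflicting Allow/Disallow rules; treat it as a quick sanity check. Also avoid disallowing a URL in robots.txt that you want removed with noindex: if crawlers cannot fetch the URL, they never see the header.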

Sitemaps work differently on large websites. It makes little sense to list millions of URLs in your sitemaps and expect Googlebot to crawl them all. Instead, generate sitemaps of new products, categories, and pages every day. This helps Googlebot discover new content and makes your sitemaps more effective. For example, DOM.RIA, a Ukrainian real estate marketplace, improved indexing by creating mini-sitemaps for each city directory. This approach considerably increased Googlebot visits (by over 200% for key pages), resulting in greater content visibility and higher click-through rates from the SERPs.
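A daily, per-directory sitemap job might look like the following Python sketch. It assumes a hypothetical list of `(url, city, published_date)` records and uses only the standard library; it illustrates the idea and is not DOM.RIA’s actual implementation. Each file is checked against the sitemaps.org limit of 50,000 URLs.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def build_sitemap(entries):
    """Serialize (url, lastmod_date) pairs as a sitemap XML string."""
    if len(entries) > MAX_URLS:
        raise ValueError("split the list: a sitemap file may hold at most 50,000 URLs")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in entries:
        node = ET.SubElement(urlset, "url")
        ET.SubElement(node, "loc").text = url
        ET.SubElement(node, "lastmod").text = lastmod.isoformat()
    return ET.tostring(urlset, encoding="unicode")


def daily_city_sitemaps(pages, day):
    """Group pages published on `day` into one mini-sitemap per city directory."""
    by_city = defaultdict(list)
    for url, city, published in pages:
        if published == day:
            by_city[city].append((url, published))
    return {city: build_sitemap(entries) for city, entries in by_city.items()}
```

The resulting files would then be listed in a sitemap index file that you submit to search engines.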

Site Architecture And Navigation

An intuitive site structure helps both users and search engine crawlers navigate the site efficiently, which improves overall SEO performance.

In particular, a flat site architecture reduces the number of clicks needed to reach any page on your website, which makes it easier for search engines to crawl and index your content. Keeping critical content close to the homepage makes crawling more efficient, so more of your pages become visible in search engine indexes.

It is therefore worth arranging (or reorganizing) content into a shallow hierarchy, which makes pages easier to reach and distributes link equity more evenly across your website.
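Click depth, the minimum number of clicks from the homepage, is a practical way to measure how flat an architecture really is. This sketch runs a breadth-first search over a hypothetical internal link map that lists, for each page, the pages it links to (for example, an export from a site crawler). The threshold of three clicks is an assumed rule of thumb, not a fixed standard.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search from the homepage: depth = minimum number of clicks to reach a page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links, max_depth=3, start="/"):
    """List reachable pages deeper than max_depth (orphan pages never appear here)."""
    return sorted(p for p, d in click_depths(links, start).items() if d > max_depth)
```

Pages that are missing from the result entirely are orphans with no internal path from the homepage, a separate problem worth flagging.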

For enterprise eCommerce clients in particular, make sure dynamic URL parameters are handled properly. Use the rel="canonical" link element to point search engines to the original page, so that parameterized URLs do not create duplicate content.

Similarly, product variations (such as color and size) can generate multiple URLs with near-identical content. As a general rule, though specific cases may call for a different approach, apply the canonical tag so that all variations point back to the preferred product URL for indexing. If Google ignores your canonical tags on a substantial number of pages and indexes the non-canonical versions instead, it may be worth reevaluating your canonicalization strategy.
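Here is a minimal sketch of parameter handling for canonical tags. The parameter names in `NON_CANONICAL_PARAMS` are hypothetical, since every client’s URL scheme differs. Parameters that change the page content, such as pagination, should usually stay in the canonical URL, which is why `page` is not stripped here.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical variant, sorting, and session parameters; adjust to the client's URL scheme.
NON_CANONICAL_PARAMS = {"color", "size", "sort", "sessionid"}


def canonical_url(url):
    """Drop variant, sorting, and tracking parameters so all versions share one canonical URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in NON_CANONICAL_PARAMS and not key.lower().startswith("utm_")
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(url):
    """Build the <link rel="canonical"> element for the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'
```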

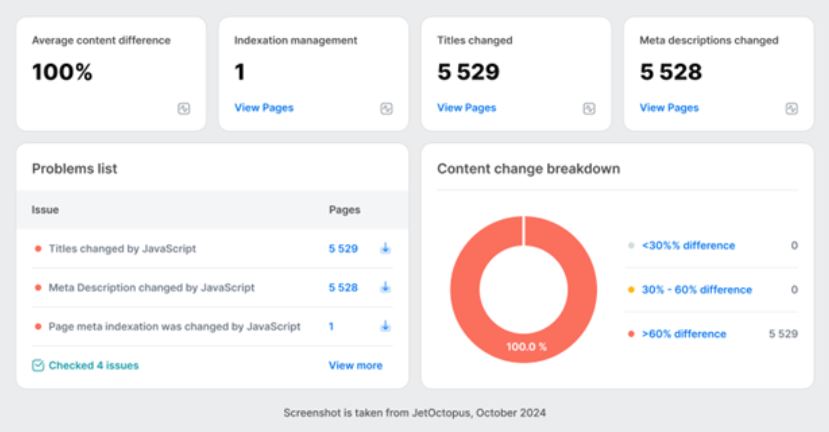

JavaScript SEO

As you know, JavaScript (JS) is a critical component of modern web development. It improves the functionality and interactivity of websites, but it also creates distinct SEO challenges. Making sure your JavaScript SEO is effective matters even if you are not directly involved in development.

Critical rendering path optimization is the most important factor in this context.

The critical rendering path is the sequence of steps a browser takes to convert HTML, CSS, and JavaScript into a rendered web page. Optimizing this path is essential to make pages visible to users faster.

To optimize it:

- Reduce the number and size of the resources needed to display the initial content.

- Minify JavaScript files to reduce their loading time.

- Prioritize loading above-the-fold content to speed up page rendering.
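As a rough illustration of the first point, this sketch uses Python’s standard `html.parser` to flag external scripts in the `<head>` that load without `async` or `defer` and therefore block rendering. It is deliberately narrow: it does not inspect stylesheets, inline scripts, or resource sizes, which a tool such as Lighthouse reports in more detail.

```python
from html.parser import HTMLParser


class RenderBlockingScripts(HTMLParser):
    """Collect external <script> tags in <head> that lack async/defer (render-blocking)."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.blocking = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head and "src" in attributes:
            is_module = attributes.get("type") == "module"  # module scripts defer by default
            if not ("async" in attributes or "defer" in attributes or is_module):
                self.blocking.append(attributes["src"])

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_render_blocking(html):
    parser = RenderBlockingScripts()
    parser.feed(html)
    return parser.blocking
```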

If you are working with Single Page Applications (SPAs), which rely on JavaScript to load content dynamically, you may need to address the following:

- Indexing Issues: Because content loads dynamically, search engines may see an empty page. Implement Server-Side Rendering (SSR) so that content is visible to search engines as soon as the page loads.

- Navigation Issues: SPAs often lack conventional link-based navigation, which affects how search engines interpret the site’s structure. Use the HTML5 History API to give each view a real URL, improving crawlability while preserving conventional navigation.
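To check whether search engines could be seeing an empty page, you can inspect the HTML the server returns before any JavaScript runs. The sketch below takes that raw HTML as a string (fetching it is left out) together with hypothetical phrases that should appear on the page, and reports missing phrases and crawlable `<a href>` links. A bare SPA shell typically fails both checks; a server-rendered page passes them.

```python
from html.parser import HTMLParser


class RawHtmlAudit(HTMLParser):
    """Gather visible text and crawlable links from server-delivered HTML (no JS executed)."""

    def __init__(self):
        super().__init__()
        self.text = []
        self.links = []
        self._skip = 0  # depth inside <script>/<style>, whose text isn't page content

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1
        href = dict(attrs).get("href")
        if tag == "a" and href and not href.startswith(("#", "javascript:")):
            self.links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.text.append(data)


def audit_raw_html(html, must_contain):
    audit = RawHtmlAudit()
    audit.feed(html)
    body_text = " ".join(audit.text)
    missing = [phrase for phrase in must_contain if phrase not in body_text]
    return {"missing_phrases": missing, "crawlable_links": audit.links}
```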

Another technique that helps JavaScript-heavy websites is dynamic rendering, which serves pre-rendered static HTML to search engines while presenting the interactive version to users. Note that Google describes dynamic rendering as a workaround rather than a long-term solution.
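The routing decision behind dynamic rendering can be sketched as a user-agent check. The bot token list is a hypothetical starting point, and the two HTML arguments are placeholders for whatever your pre-rendering service and application actually produce.

```python
# Hypothetical crawler user-agent tokens; maintain your own list from log data.
BOT_TOKENS = ("googlebot", "bingbot", "yandex", "duckduckbot")


def is_search_bot(user_agent):
    """Case-insensitive substring match against known crawler tokens."""
    ua = (user_agent or "").lower()
    return any(token in ua for token in BOT_TOKENS)


def select_variant(user_agent, prerendered_html, spa_shell_html):
    """Dynamic rendering: crawlers get pre-rendered HTML, browsers get the JavaScript app."""
    return prerendered_html if is_search_bot(user_agent) else spa_shell_html
```

Both variants must carry equivalent content; serving search engines materially different content from what users see would be cloaking.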

Either way, check that the browser console is free of errors so the page renders completely with all necessary content. Also make sure pages load quickly, ideally within a few seconds, to reduce bounce rates and avoid frustrating users (no one enjoys a long loading spinner).

Test and monitor your website’s rendering and Core Web Vitals with tools such as GSC and Lighthouse. Make sure the rendered content search engines see matches what users see, so that search engines index consistent content.
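When reviewing Core Web Vitals, it helps to rate each metric against Google’s published thresholds: LCP is good at 2.5 s or less and poor above 4 s, INP is good at 200 ms or less and poor above 500 ms, and CLS is good at 0.1 or less and poor above 0.25. Google assesses these at the 75th percentile of field data. The sketch assumes you already have those 75th-percentile values (for example from Chrome UX Report field data); the input format is an assumption.

```python
# Google's published Core Web Vitals thresholds: (good upper bound, poor lower bound).
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless
}


def rate(metric, value):
    """Classify one metric value as good / needs improvement / poor."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


def rate_page(p75_values):
    """Rate each metric using its 75th-percentile field value."""
    return {metric: rate(metric, value) for metric, value in p75_values.items()}
```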

Optimizing For Seasonal Trends

In retail eCommerce, seasonal trends shape consumer behavior and, as a result, search queries.

For these clients, adjust your SEO strategy regularly to keep it in line with product line updates.

Seasonal product variations, such as holiday-specific items or summer/winter editions, need special attention to make sure they are visible at the right times:

- Content Updates: Before the season starts, update product descriptions, meta tags, and content to include seasonal keywords.

- Seasonal Landing Pages: Create and optimize landing pages specifically for seasonal products, and make sure they are linked from the primary product categories.

- Ongoing Keyword Research: Keep researching keywords to optimize new product categories and capture shifting consumer interests.

- Technical SEO: Routinely monitor crawl errors, and make sure new pages are mobile-friendly, accessible, and fast to render.
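The landing page item can be checked automatically against a crawler’s link map. In this sketch, `links` maps each page to the pages it links to, and all page paths are hypothetical; the function returns seasonal landing pages that no primary category page links to.

```python
def unlinked_seasonal_pages(links, seasonal_pages, category_pages):
    """Return seasonal landing pages that no primary category page links to."""
    linked = {target for page in category_pages for target in links.get(page, [])}
    return sorted(set(seasonal_pages) - linked)
```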

On the other hand, managing discontinued products and obsolete pages is just as important for maintaining site quality and retaining SEO value:

- Assess Page Value: Run regular content audits to determine whether a page is still valuable. A page that has received no traffic and no bot visits in the past six months may not be worth keeping.

- 301 Redirects: Use 301 redirects to pass SEO value from outdated pages to relevant existing content.

- Prune Content: Remove or consolidate underperforming content to concentrate authority on higher-impact pages and improve the site’s structure and user experience.

- Out-of-Stock but Informative Pages: Keep pages for seasonally unavailable products useful by adding expected availability dates or links to related products.
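The audit rules above can be turned into a first-pass triage function. The field names (`last_visit`, `last_bot_visit`, `redirect_to`, `seasonal`, `out_of_stock`) are hypothetical, and the six-month window follows the rule of thumb in the checklist. The output is a suggestion for human review, not an automatic decision.

```python
from datetime import date, timedelta

STALE_AFTER = timedelta(days=182)  # roughly six months


def page_action(page, today):
    """Suggest an action for one page using hypothetical audit fields."""
    if page["seasonal"] and page["out_of_stock"]:
        return "keep: add availability date or related products"
    cutoff = today - STALE_AFTER
    # Stale only if there was neither user traffic nor a bot visit since the cutoff.
    stale = page["last_visit"] < cutoff and page["last_bot_visit"] < cutoff
    if not stale:
        return "keep"
    if page.get("redirect_to"):
        return f"301 -> {page['redirect_to']}"
    return "prune or consolidate"
```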

In short, optimizing for seasonal trends means preparing for high-traffic periods and managing the transitions between them well. This keeps the site experience smooth for your clients and sustains SEO performance.

Structured Data And Schema Implementation

Structured data in schema.org markup enables rich results (rich snippets), a powerful way to increase a website’s click-through rate (CTR) and visibility in SERPs.

Advanced schema markup goes beyond a basic implementation, letting you present more specific and detailed information in SERPs. For your next client campaign, consider the following types of markup:

- Nested Schema: Use nested schema objects to provide more complete information. For example, nest Offer and Review objects inside a Product schema to display prices and reviews in search results.

- Event Schema: Clients promoting events can implement Event schema with nested properties such as startDate, endDate, location, and offers, so that event details appear directly in SERPs as rich results.

- How-To Pages and Frequently Asked Questions: Add FAQPage and HowTo schema to relevant pages to offer direct answers in search results. Check current eligibility first: Google now shows FAQ rich results only for well-known, authoritative government and health websites and no longer shows HowTo rich results.

- Prices, Reviews, and Ratings: Implement AggregateRating and Review schema to display star ratings and reviews for product pages. Use Offer schema to provide pricing information, making listings more appealing to prospective buyers.

- Availability Status: Indicate stock status with the Offer availability property and ItemAvailability values such as InStock or OutOfStock, which can add urgency and make a purchase from the SERPs more likely.

- Blog Enhancements: To improve how blog articles appear on content-heavy websites, implement Article schema with properties such as headline, author, and datePublished.
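Several of these items can be combined in one JSON-LD block. The Python sketch below builds Product markup with a nested Offer (including availability), AggregateRating, and Review list. The product dictionary and its keys are hypothetical; the property names and types come from schema.org.

```python
import json


def product_jsonld(product):
    """Return a JSON-LD <script> block for a product dict with hypothetical keys."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product["name"],
        "sku": product["sku"],
        "offers": {  # nested Offer: price and stock status
            "@type": "Offer",
            "price": f"{product['price']:.2f}",
            "priceCurrency": product["currency"],
            "availability": "https://schema.org/"
            + ("InStock" if product["in_stock"] else "OutOfStock"),
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": product["rating"],
            "reviewCount": product["review_count"],
        },
        "review": [
            {
                "@type": "Review",
                "author": {"@type": "Person", "name": r["author"]},
                "reviewRating": {"@type": "Rating", "ratingValue": r["stars"]},
                "reviewBody": r["body"],
            }
            for r in product["reviews"]
        ],
    }
    # Escape "</" so review text cannot close the <script> element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'
```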

Use the Schema Markup Validator (the successor to Google’s retired Structured Data Testing Tool) to check the structured data on your pages for errors or warnings. Then use Google’s Rich Results Test to see whether your page is eligible for rich results and how it may appear in SERPs with the structured data you have implemented.
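Before running pages through those tools, a quick local check can catch JSON syntax errors across many templates. This sketch extracts every `<script type="application/ld+json">` block with Python’s standard `html.parser` and reports blocks that fail to parse. It only catches syntax problems; vocabulary and eligibility checks still belong to the validators mentioned above.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._capturing = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._capturing = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._capturing = False

    def handle_data(self, data):
        if self._capturing:
            self.blocks[-1] += data  # data may arrive in several chunks


def jsonld_errors(html):
    """Return (block index, error message) for each JSON-LD block that isn't valid JSON."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    errors = []
    for index, block in enumerate(extractor.blocks):
        try:
            json.loads(block)
        except json.JSONDecodeError as exc:
            errors.append((index, exc.msg))
    return errors
```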

Conclusion

Because of their long SEO history and legacy, enterprise-level websites require a more thorough review from multiple perspectives.

We hope this mini checklist gives your team a foundation for taking a fresh look at your existing and new clients and delivering exceptional SEO results.